Building an AI-Powered Venue Research Multi-Agent System with CrewAI

Introduction

AI agents are the latest trend in the race to AGI. What makes building them captivating is the potential to automate all kinds of work. The idea to build a venue research multi-agent system came to me when my friend, who runs a tech mixer event, mentioned all of the work he has to do to find venues.

This felt like a great opportunity to get hands-on experience with AI agents, and now I will share how I built the system. This tutorial shows how to use CrewAI to create a multi-agent system that searches for, evaluates, and reaches out to potential event venues.

You can find the complete source code for this project on GitHub:

- Repository: venue-score-flow

- Live Demo: Event Planning Research Assistant

The Challenge

Manual venue research is time-consuming and often inconsistent. Event planners spend countless hours searching across platforms like PartySlate and Peerspace, evaluating venues, and reaching out to vendors. In this tutorial, I will show you how to automate this entire workflow using CrewAI's multi-agent architecture.

This solution has the potential to help event planners:

- Reduce venue research time from days to minutes

- Ensure consistent venue evaluation across platforms

- Automate personalized vendor outreach

- Enable less experienced team members to manage venue searches effectively

Try it out: https://event-planning-research-assistant.streamlit.app/

Building with CrewAI

Step 1: Setting Up the Pydantic Models

First, let's define our data models using Pydantic. These models will help us maintain type safety and data validation throughout our application:

    import uuid  # needed for the default venue id below
    from datetime import datetime
    from typing import List, Dict, Optional

    from pydantic import BaseModel, Field


    class InputData(BaseModel):
        """User input data for venue search"""

        location: str
        radius: int
        event_info: str
        sender_name: str
        sender_company: str
        sender_email: str


    class Venue(BaseModel):
        """Represents a venue found during search"""

        # A fresh UUID string is generated for each venue
        id: str = Field(default_factory=lambda: str(uuid.uuid4()))
        name: str
        address: str
        description: str
        website: Optional[str] = None
        phone: Optional[str] = None
        email: Optional[str] = None
        created_at: datetime = Field(default_factory=datetime.now)


    class VenueScore(BaseModel):
        """Venue evaluation scores and reasoning"""

        name: str
        score: int  # 1-100
        reason: str
        created_at: datetime = Field(default_factory=datetime.now)


    class ScoredVenues(BaseModel):
        """Combined venue data with scores"""

        id: str
        name: str
        address: str
        description: str
        website: Optional[str] = None
        phone: Optional[str] = None
        email: Optional[str] = None
        score: int
        reason: str
        created_at: datetime

These models serve different purposes in our workflow:

- InputData: Captures user requirements and contact information

- Venue: Stores raw venue data from our search results

- VenueScore: Contains the AI agent's evaluation of each venue

- ScoredVenues: Combines venue information with its evaluation score
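To make the relationship between these models concrete, here is a minimal sketch of how a venue and its score might be merged into one combined record. It uses plain dataclasses as stand-ins for the Pydantic models so that it runs without dependencies, and it assumes venue names are unique enough to join on (VenueScore carries a name but no id). The `combine` function and the `ScoredVenue` stand-in are hypothetical, not taken from the project.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class Venue:
    id: str
    name: str
    address: str
    description: str
    website: Optional[str] = None


@dataclass
class VenueScore:
    name: str
    score: int  # 1-100
    reason: str


@dataclass
class ScoredVenue:
    id: str
    name: str
    address: str
    description: str
    website: Optional[str]
    score: int
    reason: str


def combine(venues, scores):
    """Join venues to scores by name; venues without a score are skipped."""
    by_name = {s.name: s for s in scores}
    combined = []
    for venue in venues:
        match = by_name.get(venue.name)
        if match is None:
            continue
        combined.append(
            ScoredVenue(**asdict(venue), score=match.score, reason=match.reason)
        )
    # Highest score first
    return sorted(combined, key=lambda v: v.score, reverse=True)


venues = [
    Venue(id="1", name="Loft A", address="1 Main St", description="Open loft"),
    Venue(id="2", name="Hall B", address="2 Oak Ave", description="Banquet hall"),
    Venue(id="3", name="Cafe C", address="3 Elm Rd", description="Small cafe"),
]
scores = [
    VenueScore(name="Loft A", score=72, reason="Good size"),
    VenueScore(name="Hall B", score=88, reason="Great location"),
]
ranked = combine(venues, scores)
print([(v.name, v.score) for v in ranked])  # [('Hall B', 88), ('Loft A', 72)]
```

Joining on name is the simplest option given the fields shown above; if two venues can share a name, carrying the venue id through the scoring step would be more robust.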

Step 2: Setting Up the Agent Architecture

The system uses three specialized crews, each handling a different aspect of the venue research process:

- Venue Search Crew - Discovers potential venues

- Venue Score Crew - Evaluates venues based on criteria

- Venue Response Crew - Generates outreach emails

Here's how to create your first crew:

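    from crewai import Crew, Process
    from crewai.project import CrewBase, crew


    @CrewBase
    class VenueSearchCrew:
        # The location_analyst agent and analyze_location task are defined
        # elsewhere in this class; they are not shown in this excerpt.

        @crew
        def crew(self) -> Crew:
            return Crew(
                agents=[self.location_analyst()],
                tasks=[self.analyze_location()],
                process=Process.sequential,  # run tasks one after another
                verbose=True,
            )

Step 3: Implementing the Workflow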

The workflow uses Python decorators, here CrewAI's @start() and @listen(), to manage the sequence of operations. Here's how it works:

    import json
    import os

    from crewai.flow.flow import Flow, listen, start
    from pydantic import ValidationError


    # Assumes a structured state model (not shown in this excerpt) that
    # provides the `input_data` and `venues` fields used below.
    class VenueScoreFlow(Flow):
        def __init__(
            self,
            openai_key: str,
            serper_key: str,
            input_data: InputData,
        ):
            super().__init__()
            self.openai_key = openai_key
            self.serper_key = serper_key
            self.state.input_data = input_data

        @start()
        async def initialize_state(self) -> None:
            """Initialize the flow state and set up API keys"""
            print("Starting VenueScoreFlow")
            os.environ["OPENAI_API_KEY"] = self.openai_key
            os.environ["SERPER_API_KEY"] = self.serper_key

        @listen("initialize_state")
        async def search_venues(self):
            """Search for venues after initialization"""
            print("Searching for venues...")
            search_crew = VenueSearchCrew()
            result = await search_crew.crew().kickoff_async(
                inputs={"input_data": self.state.input_data}
            )

            # Parse and store venues in state
            venues_data = json.loads(result.raw)
            for venue_data in venues_data:
                if isinstance(venue_data, dict):
                    try:
                        venue = Venue(**venue_data)
                        self.state.venues.append(venue)
                    except ValidationError as e:
                        # Skip entries that do not match the Venue model
                        print(f"Validation error: {e}")

The workflow is managed through several key components:

Flow Initialization:

- The @start() decorator marks the entry point

- Sets up required API keys and initializes state

- Triggers the first step in the workflow

Event Listeners:

- @listen() decorators create a chain of dependent tasks

- Each listener waits for completion of previous tasks

- Ensures tasks execute in the correct order

Crew Management:

- Each major task is handled by a specialized crew

- Crews are instantiated and executed asynchronously

- Results are stored in the shared state
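Before results reach the shared state, the search step shown earlier calls json.loads on the crew's raw output. Model output is not guaranteed to be clean JSON (it is sometimes wrapped in a Markdown code fence), so a defensive parser can help. The following is a minimal sketch under that assumption; `parse_venue_list` is a hypothetical helper, not part of CrewAI or the project.

```python
import json

FENCE = "`" * 3  # Markdown code fence marker


def parse_venue_list(raw: str) -> list:
    """Return the dict entries found in raw model output, or [] on failure."""
    text = raw.strip()
    if text.startswith(FENCE) and text.endswith(FENCE):
        # Drop the opening fence line (it may carry a language tag)
        # and the closing fence line
        lines = text.splitlines()
        text = "\n".join(lines[1:-1])
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    # Keep only dict entries, mirroring the isinstance check in the flow
    return [item for item in data if isinstance(item, dict)]


clean = parse_venue_list('[{"name": "Loft A"}, "stray string"]')
fenced = parse_venue_list(FENCE + 'json\n[{"name": "Hall B"}]\n' + FENCE)
print(clean, fenced)
```

Returning an empty list on failure keeps the flow running with no venues instead of raising; whether that is the right trade-off depends on how you want failures surfaced.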

Here's how the scoring crew is implemented:

        # Method of VenueScoreFlow; needs `import asyncio` and
        # `from crewai.flow.flow import listen, or_` at the top of the module.
        @listen(or_(search_venues, "score_venues_feedback"))
        async def score_venues(self):
            """Score venues after search or feedback"""
            print("Scoring venues...")
            tasks = []
            for venue in self.state.venues:
                score_crew = VenueScoreCrew()
                # kickoff_async returns a coroutine; collect them to run together
                task = score_crew.crew().kickoff_async(
                    inputs={
                        "venue": venue,
                        "event_info": self.state.input_data.event_info,
                    }
                )
                tasks.append(task)

            # Process all venues concurrently
            venue_scores = await asyncio.gather(*tasks)
            self.state.venue_score = [
                score for score in venue_scores
                if score is not None
            ]

The workflow follows this sequence:

- Initialize state and API keys

- Search for venues using the search crew

- Score venues using the scoring crew

- Generate emails using the response crew

Each step waits for the completion of its dependencies, ensuring data consistency throughout the process.
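The concurrent scoring step relies on asyncio.gather. By default, the first exception raised by any task propagates out of gather and the other results are lost to the caller. The sketch below shows one way to keep the successful scores, using return_exceptions=True. The `score_venue` coroutine is a hypothetical stand-in for a scoring crew's kickoff_async call, and the scores it returns are made up for illustration.

```python
import asyncio


async def score_venue(name: str) -> dict:
    """Hypothetical stand-in for a scoring crew's kickoff_async call."""
    await asyncio.sleep(0.01)  # simulate model / network latency
    if name == "Broken Venue":
        raise RuntimeError("scoring failed")
    return {"name": name, "score": 50 + len(name)}


async def score_all(names):
    # return_exceptions=True returns exceptions as results, so one
    # failed venue does not discard the scores of the others
    results = await asyncio.gather(
        *(score_venue(n) for n in names), return_exceptions=True
    )
    scores, errors = [], []
    # gather preserves input order, so results line up with names
    for name, result in zip(names, results):
        if isinstance(result, Exception):
            errors.append((name, str(result)))
        else:
            scores.append(result)
    return scores, errors


scores, errors = asyncio.run(score_all(["Loft A", "Broken Venue", "Hall B"]))
print(scores)
print(errors)
```

Collecting the errors separately also gives you a list of venues to retry or report.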

Enhancing the System

Here are some ways to extend the basic implementation:

Add Human Feedback:

- Implement feedback loops for venue scoring using crew.train()

- Allow manual review of generated emails

- Enable custom scoring criteria

Improve Search Results:

- Integrate reasoning models such as OpenAI's o1 or o1-preview for venue recommendations. Just be sure to pay attention to the cost of these models.

- Consider updating the Crew design to include more sophisticated agents.

- Implement historical performance tracking

Evaluate the Results:

- Consider using Langtrace to evaluate the results of the crew.

The Solution

The venue research application provides a simple web interface to automate venue discovery and outreach. Here's how to use it:

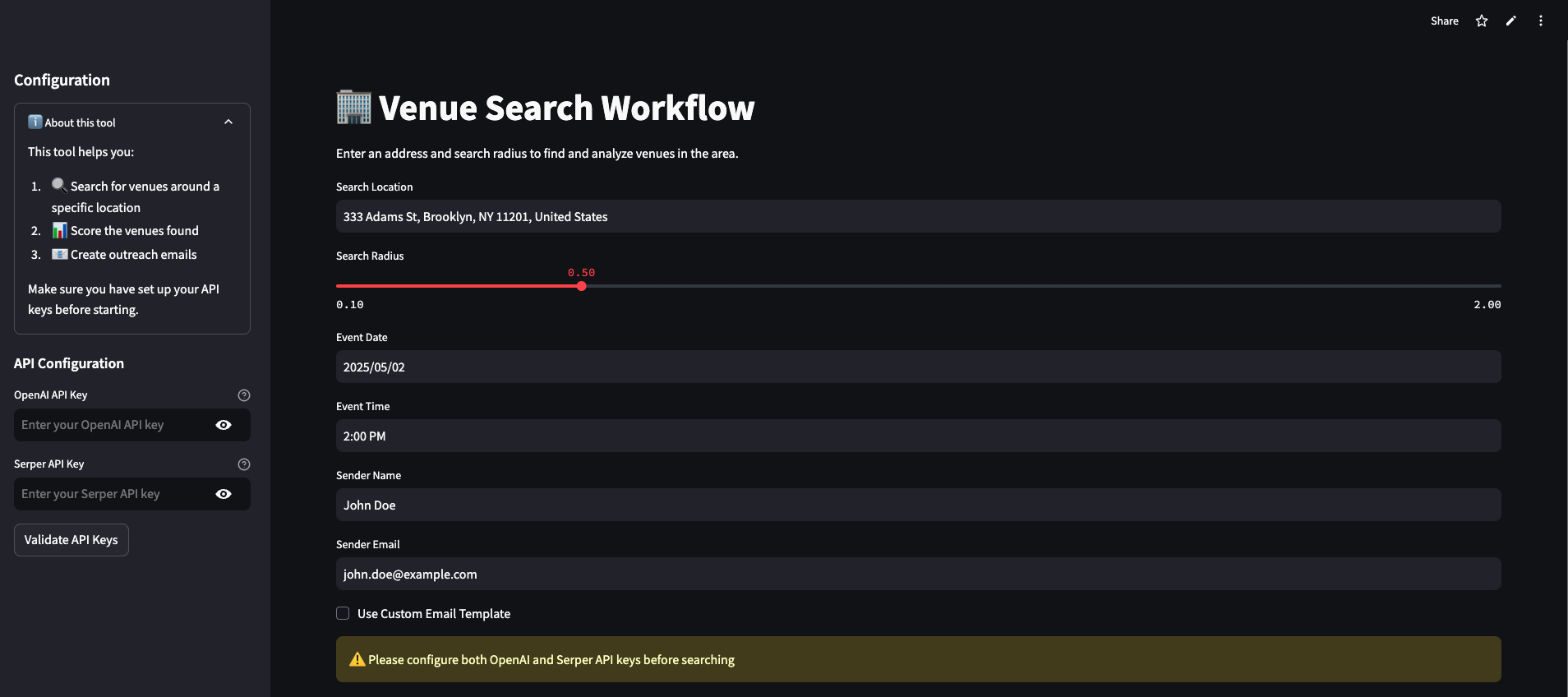

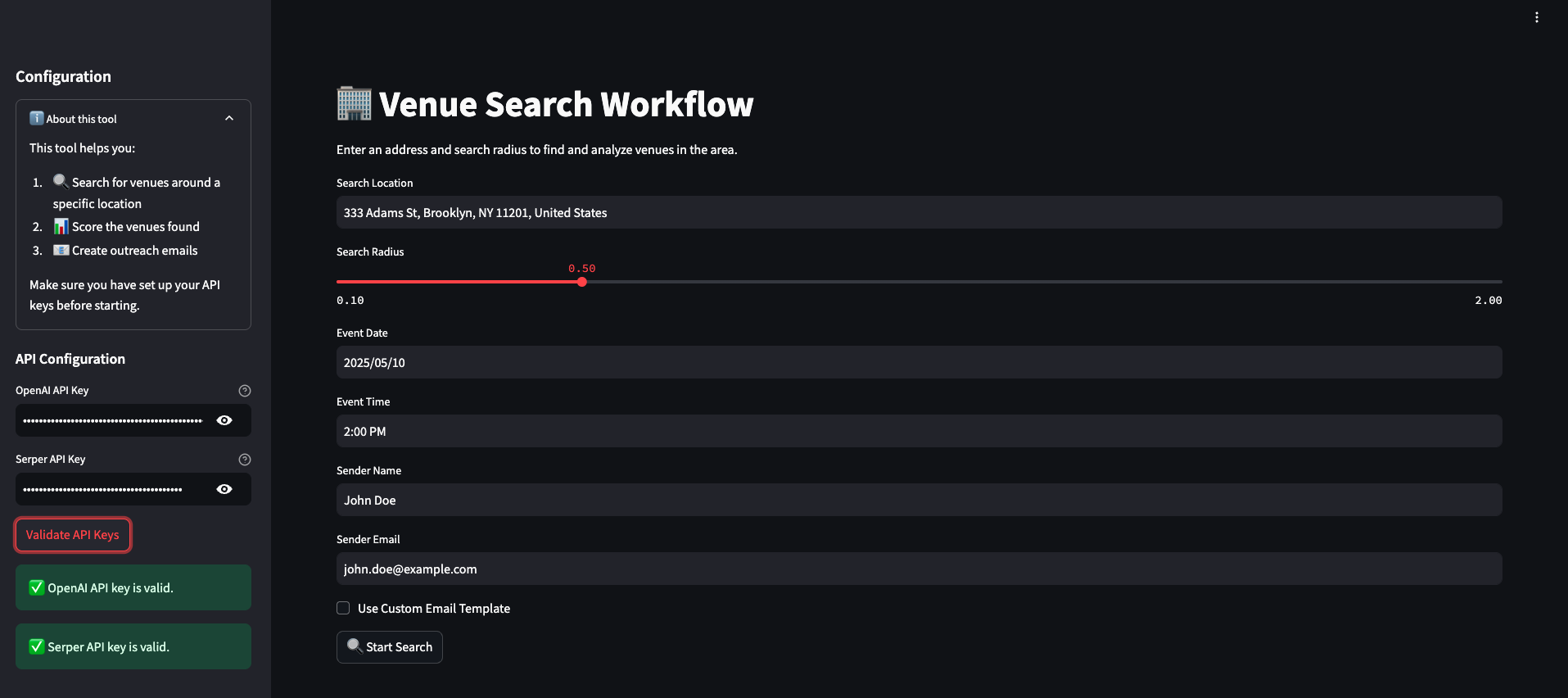

Step 1: Configure API Keys

- Get your API keys:

- OpenAI API key from OpenAI Platform

- Serper API key from Serper.dev

- Enter both API keys in the sidebar

- Click "Validate API Keys" to ensure they work

Step 2: Enter Search Parameters and Custom Email Template

-

Location Details:

- Enter the city or area where you want to find venues

- Specify search radius in miles (e.g., 5, 10, 20)

-

Event Information:

- Describe your event details:

- Event date

- Event time

- Describe your event details:

-

Contact Information:

- Your full name

- Company name

- Professional email address

-

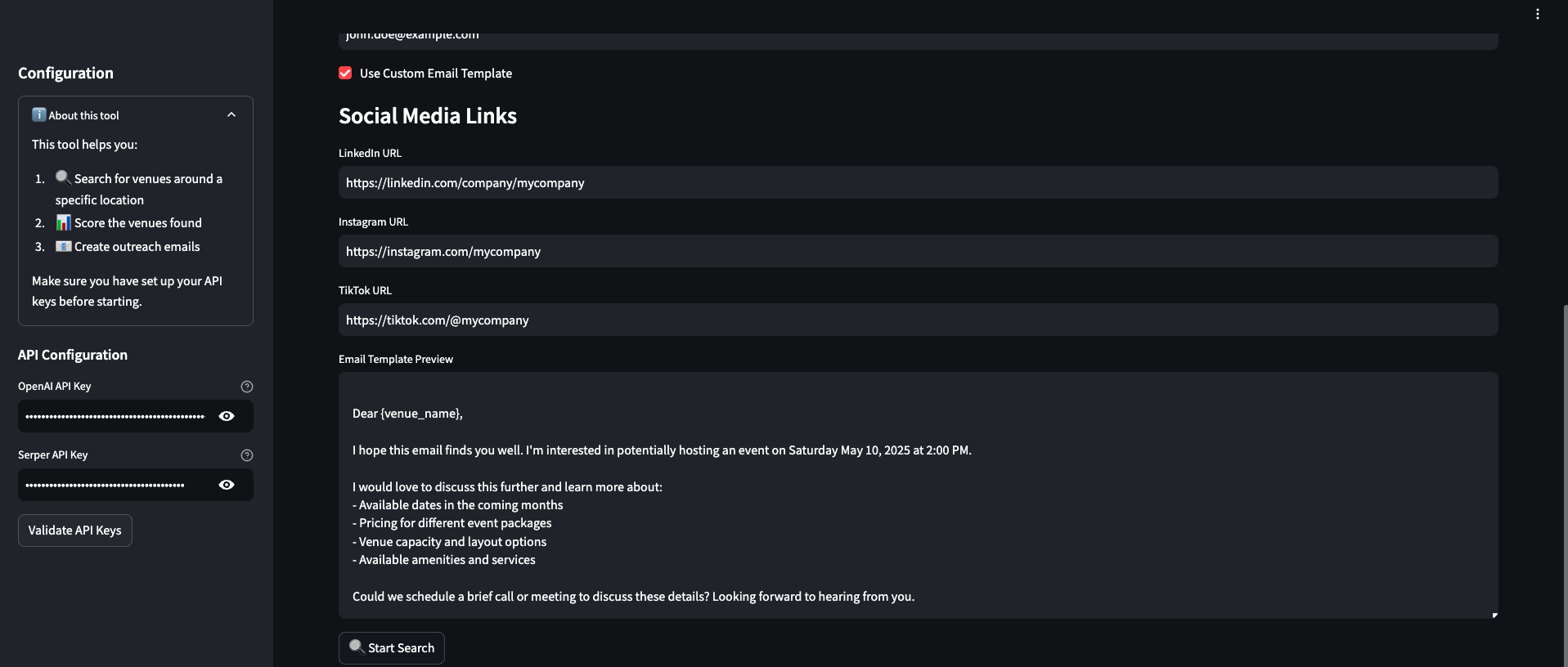

Click "Use Custom Email Template" to use a custom email template

- Social Media Links

- Linkedin Url

- Instagram Url

- TikTok Url

- The system generates a default email template if this is not checked
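To illustrate how the contact information and optional social links could feed an outreach email, here is a minimal sketch using Python's string.Template. The template wording, the placeholder names, and the `render_email` helper are all hypothetical; the app's actual default template is not shown in this tutorial, and appending the links as a signature is an assumption.

```python
from string import Template

# Hypothetical default template; wording and placeholders are illustrative only
DEFAULT_TEMPLATE = Template(
    "Hello $venue_name team,\n\n"
    "I'm $sender_name from $sender_company, and I'm planning an event: "
    "$event_info.\n"
    "Could you share availability and pricing?\n\n"
    "Best,\n$sender_name\n$sender_email"
)


def render_email(venue_name, sender_name, sender_company, sender_email,
                 event_info, social_links=None):
    """Fill the template and append any non-empty social links."""
    body = DEFAULT_TEMPLATE.substitute(
        venue_name=venue_name,
        sender_name=sender_name,
        sender_company=sender_company,
        sender_email=sender_email,
        event_info=event_info,
    )
    if social_links:
        lines = [f"{label}: {url}" for label, url in social_links.items() if url]
        if lines:
            body += "\n\n" + "\n".join(lines)
    return body


email = render_email(
    venue_name="Loft A",
    sender_name="Jane Doe",
    sender_company="Tech Mixer",
    sender_email="jane@example.com",
    event_info="a tech mixer on a weekday evening",
    social_links={"LinkedIn": "https://example.com/in/jane", "TikTok": ""},
)
print(email)
```

Template.substitute raises a KeyError when a placeholder is missing, which surfaces incomplete form input early instead of sending a half-filled email.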

Step 3: Run the Search

- Click "Start Venue Search" to begin the process

- The system will:

- Search for venues matching your criteria

- Evaluate each venue's suitability

- Generate personalized outreach emails

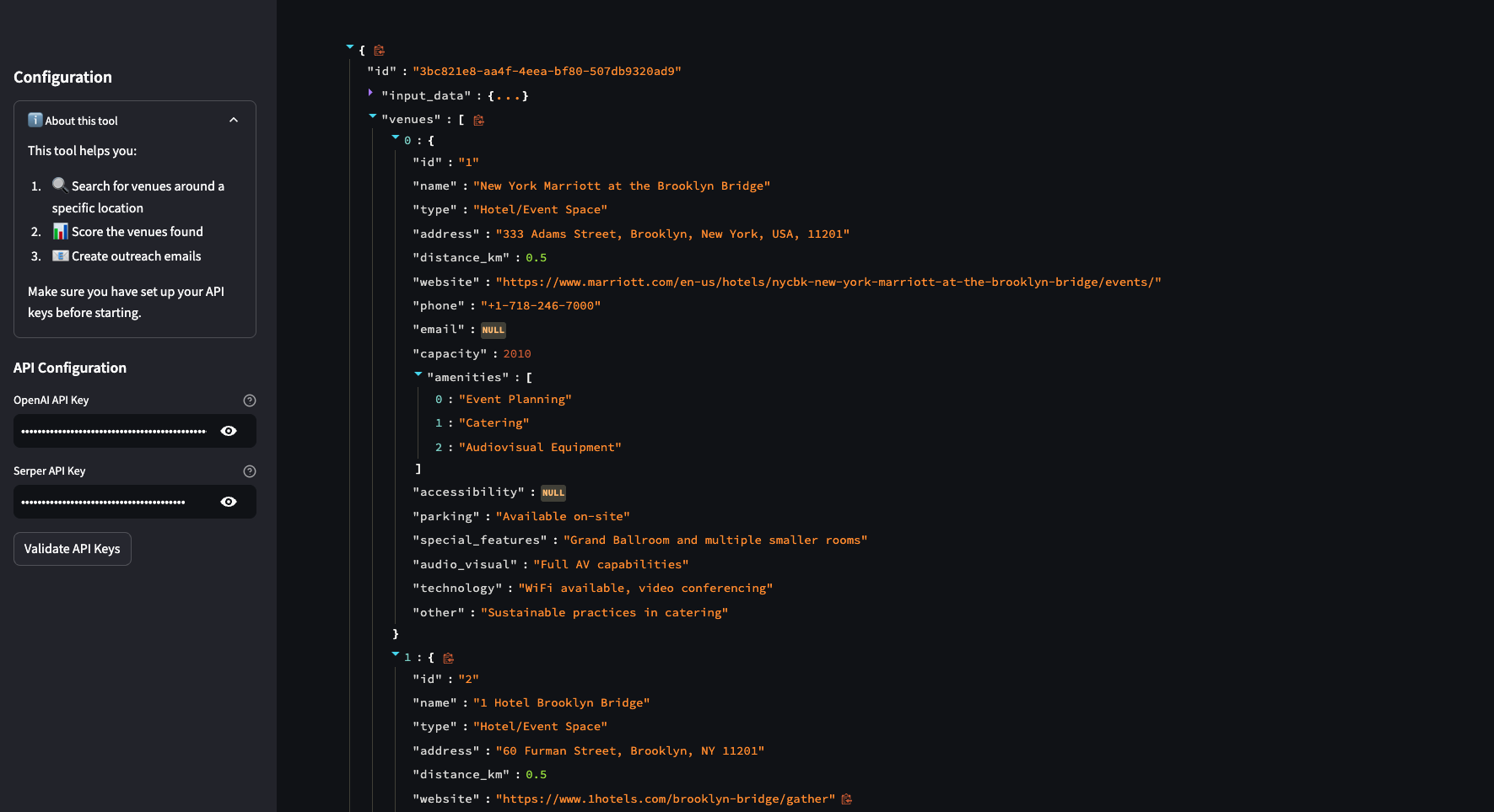

Step 4: Review Results

- View the Venue List:

- Each venue includes details like location, capacity, and amenities

- Review AI-generated scores and reasoning

- Check Generated Emails:

- Review personalized outreach emails for each venue

- Emails are saved in the email_responses directory

- Edit as needed before sending
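As a rough idea of how generated emails might end up in the email_responses directory, the sketch below writes one text file per venue. The file naming scheme and the `slugify` and `save_email` helpers are assumptions for illustration; the project may organize its files differently. The usage example writes to a temporary directory so it leaves no files behind.

```python
import re
import tempfile
from pathlib import Path


def slugify(name: str) -> str:
    """Turn a venue name into a safe file name stem."""
    slug = re.sub(r"[^a-z0-9]+", "_", name.lower()).strip("_")
    return slug or "venue"


def save_email(venue_name: str, body: str, out_dir: str = "email_responses") -> Path:
    """Write the email body to <out_dir>/<slug>.txt and return the path."""
    directory = Path(out_dir)
    directory.mkdir(parents=True, exist_ok=True)
    path = directory / f"{slugify(venue_name)}.txt"
    path.write_text(body, encoding="utf-8")
    return path


with tempfile.TemporaryDirectory() as tmp:
    saved = save_email("The Grand Hall & Loft", "Hello!", out_dir=tmp)
    saved_name = saved.name
    saved_text = saved.read_text(encoding="utf-8")

print(saved_name)
```

Two venues whose names slugify to the same stem would overwrite each other here; adding the venue id to the file name is one way to avoid that.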

Tips for Best Results

- Include all important requirements upfront

- Review and customize generated emails before sending

- Use the feedback option if venue scores need adjustment

Try it yourself: Event Planning Research Assistant

Conclusion

Hopefully, this tutorial gave you a clearer picture of how to build multi-agent systems with CrewAI. The best way to learn is by building, and a multi-agent venue research system with CrewAI doesn't have to be complicated, especially since there are [examples](https://github.com/crewAIInc/crewAI-examples/tree/main) with different multi-agent architectures to learn from.